Standard algorithms and execution policies

What are execution policies?

C++17 has brought some interesting additions to STL algorithms. One of which are execution policies. This is a set of tags that some STL algorithms accept as first argument (there’s an additional overload provided), instructing how container elements will be accessed and processed. C++17 added three distinct execution policies and one more came along with C++20. As of now, the list contains the following:

class sequenced_policy,class parallel_policy,class parallel_unsequenced_policy,class unsequenced_policy.

Terms

The description of these policies can be a bit intimidating and unfamiliar at first glance. Let’s take the description for parallel_policy:

The execution policy type used as a unique type to disambiguate parallel algorithm overloading and indicate that a parallel algorithm’s execution may be parallelized. The invocations of element access functions in parallel algorithms invoked with this policy (usually specified as std::execution::par) are permitted to execute in either the invoking thread or in a thread implicitly created by the library to support parallel algorithm execution. Any such invocations executing in the same thread are indeterminately sequenced with respect to each other.

… and parallel_unsequenced_policy:

The execution policy type used as a unique type to disambiguate parallel algorithm overloading and indicate that a parallel algorithm’s execution may be parallelized, vectorized, or migrated across threads (such as by a parent-stealing scheduler). The invocations of element access functions in parallel algorithms invoked with this policy are permitted to execute in an unordered fashion in unspecified threads, and unsequenced with respect to one another within each thread.

… as an example.

There’s a set of interesting terms appearing in these two, particularly:

- element access function

- parallel execution

- vectorisation

- parent stealing scheduler

- indeterminately sequenced invocations

Element access function

This is simply a fancy name for algorithm’s callback applied on elements within the range.

Parallel execution

This one is pretty simple as well. It just means that the aforementioned element access function, will be applied to many elements of the provided range at the same time. The description mentions “implicitly created thread” (or possibly a thread pool) on which the element access function will run in parallel to speed up the computation.

Vectorisation

Scalar operations can be generalised to perform operations on an entire arrays and this is exactly what this term is referring to. Consider Python:

|

|

From the programmer’s perspective this is a vectorised multiplication. We have no idea how it was performed though. Maybe the programming model and the hardware allows for completing this operation in parallel, maybe there’s a dedicated SIMD instruction that can calculate the result in one step. It doesn’t really matter as long as the result is correct.

In context, of parallel_unsequenced_policy, vectorisation means that element access function may be applied to an entire range at once.

Parent stealing scheduler

The wiki page describes this term in details. Broadly speaking, this means that some parts of the element access function may execute on another thread.

Indeterminately sequenced invocations

This one is simple as well. From the perspective of a single thread, the element access function will be called multiple times in sequence. The order is not defined though. So, if the range comprises elements i.e. [1,2,3], a given thread may run element access function with element 3 first, then 1 etc. The order is arbitrary and not guaranteed.

Examples

So, what can we do with it? Majority of STL algorithms support execution policies now. Let’s try a simple example:

|

|

This program loads a text file containing a list of words. One word per line. It’ll convert these lines to upper case using std::transform. For the purpose of testing, I’ve prepared a rather large file (about 1GB). It’s simply a /usr/share/dict/british-english dictionary concatenated a couple of times to make it bigger. In total it’s about 100M lines:

[tomasz@ymir ~]$ ls -lh vvvvbig

-rw-r--r-- 1 tomasz tomasz 941M Aug 13 10:44 vvvvbig

[tomasz@ymir ~]$ wc -l vvvvbig

101972800 vvvvbig

After building the program with both std::execution::seq and std::execution::par you’ll notice that… there’s virtually no difference in performance :D. Here are the results:

[tomasz@ymir ~]$ ./seq vvvvbig

got 101972800 line(s)

time measured: 5074 ms

[tomasz@ymir ~]$ ./par vvvvbig

got 101972800 line(s)

time measured: 5014 ms

GDB reports only a single thread running (of course I’ve stopped execution during actual transformation stage, not during the input reading) in case of par version:

|

|

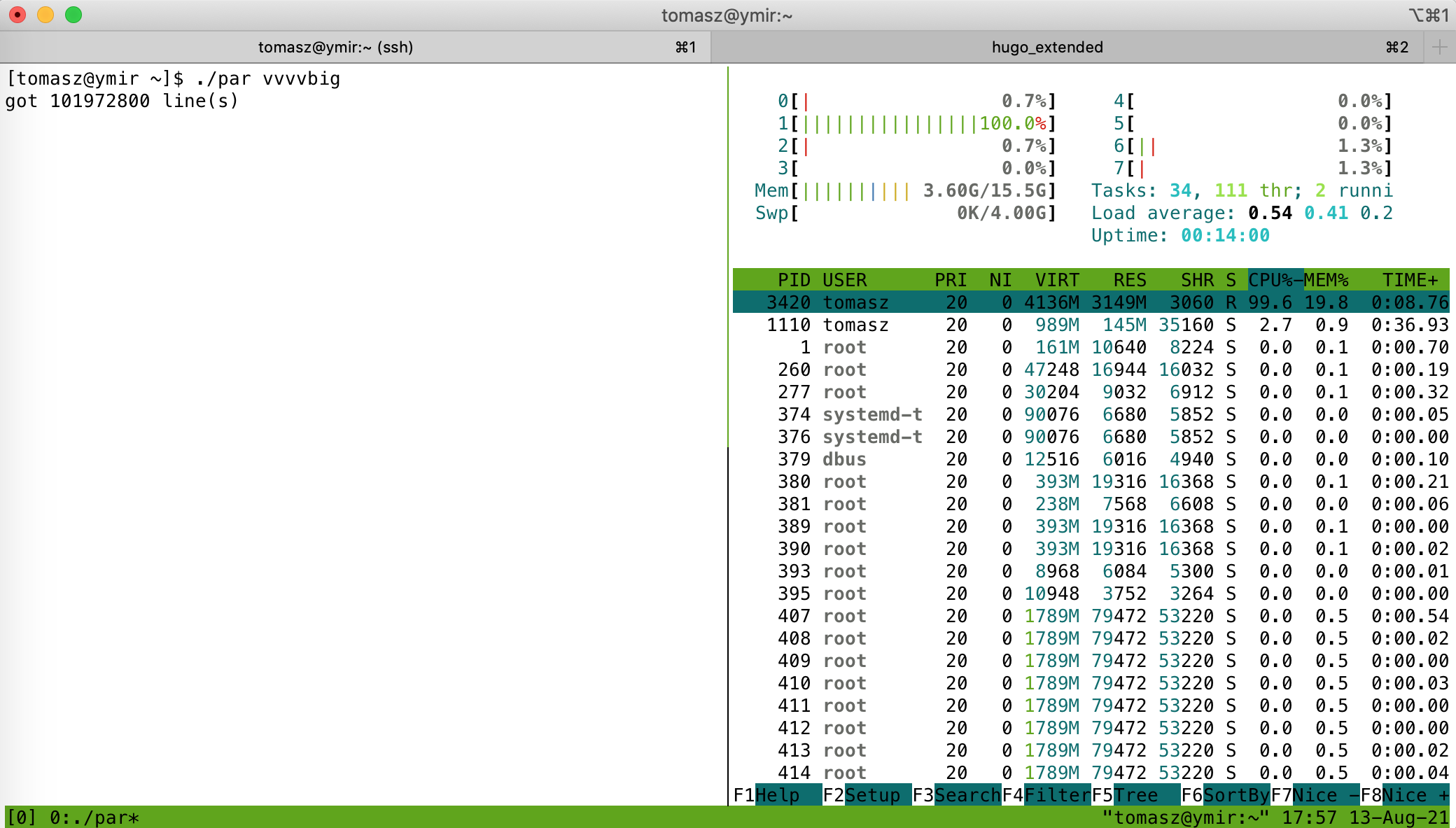

In htop only one CPU is busy doing actual work:

I was surprised by that as well. I was wondering what am I missing? After playing with various combinations of gcc flags I gave up and started googling. It seems that Intel threading building blocks library is required to enable parallel execution. After installing the necessary package:

pacman -S tbb (on arch)

or

apt install libtbb-dev (on debian)

and rebuilding the program:

g++ -std=c++17 uppercase.cpp -ltbb -o par

I finally got it working.

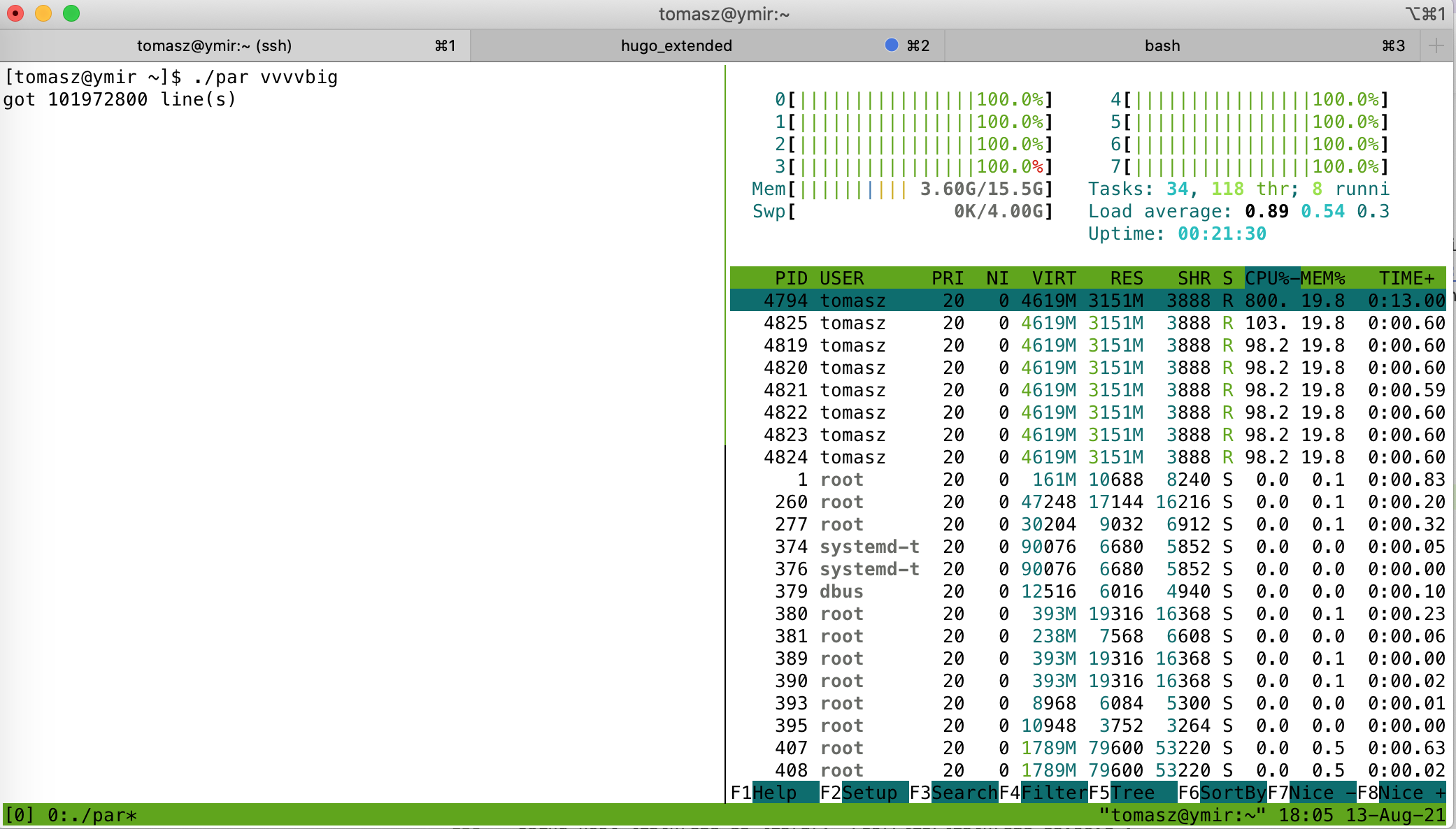

The load is spread nice and even on all cores:

In gdb it’s also visible that new threads are spawned to support parallel execution:

|

|

The performance improvement is dramatic:

[tomasz@ymir ~]$ ./par vvvvbig

got 101972800 line(s)

time measured: 751 ms

This is almost 7x faster than the seq version.

Advantages

- Simplicity. Without having to design your own parallel execution environment it’s easy to benefit from parallel execution. All the details, nooks and crannies are abstracted by STL and as a programmer you don’t have to worry about them,

- Convenience. Within a matter of seconds it’s possible to verify a hypothesis and check if given computational problem will benefit from parallel execution or not,

- Parallelize everything. Since it’s super easy to make one thing run in parallel, it becomes even easier to manage a lot more tasks in parallel fashion,

- Feels modern. This one is very subjective. For me, it feels like a fresh breath. The language feels modern and gives an impression that you can manage resources better and there are built in primitives to achieve that.

Disadvantages

- Too simple. Sure, it’s nice to have a simple solutions like that but a greater level of control would be appreciated. No control of which thread(s) the work is gonna be delegated to is a bit disappointing, considering that we’re talking about a language with heavy traditions on giving you knobs to control everything,

- Opaqueness. Similar point as the first one. It may be necessary to understand how and when threads are allocated and what are the scheduling policies. It’s unclear what’s the locking model as well. Without greater transparency in that regard, I have a feeling that this is gonna be only used in very simple cases. Not sure what the support on ARM or other platform will be/is as well,

- Portability (or lack of it?). It may occur that you have to rewrite half of your code using i.e.

std::execution::parbecause you know that your destination platform doesn’t implement it at all. In such case in order to benefit from parallel execution you’d have write your own parallel framework yourself. In other words, current execution policies semantics are not guaranteeing anything. - 3rd party libs. This one is a bit subjective as well. C++ starts to rely heavy on 3rd parties to complete its ecosystem. All of a sudden we need support from build systems to support modules since the language doesn’t come with the committee maintained tooling for that and same applies to execution policies. It has been left to implementations to either support them or not. The standard doesn’t guarantee anything and the language doesn’t come with any implementation on a per platform basis by default.