Experimenting with parser combinators in non-functional languages

Functional parsing and parser combinators

Recently, on my routine round through YouTube and social media, I’ve came across a video from Ashley Jeffs regarding a message broker he’s the author of called Benthos. Benthos itself is very interesting and I recommend to learn more about it, but what especially caught my interest was a different video from Ashley, regarding bloblang - a configuration language for Benthos written using parser combinators. Frankly, this was the first time I’ve heard that term and, intriguing as it sounds, I wanted to learn all about it. That took me through another rabbit hole, which is, I think, the original paper that defined the whole notion of parser combinators by Graham Hutton and Erik Meijer. Copy of this document can be found here.

What are parser combinators?

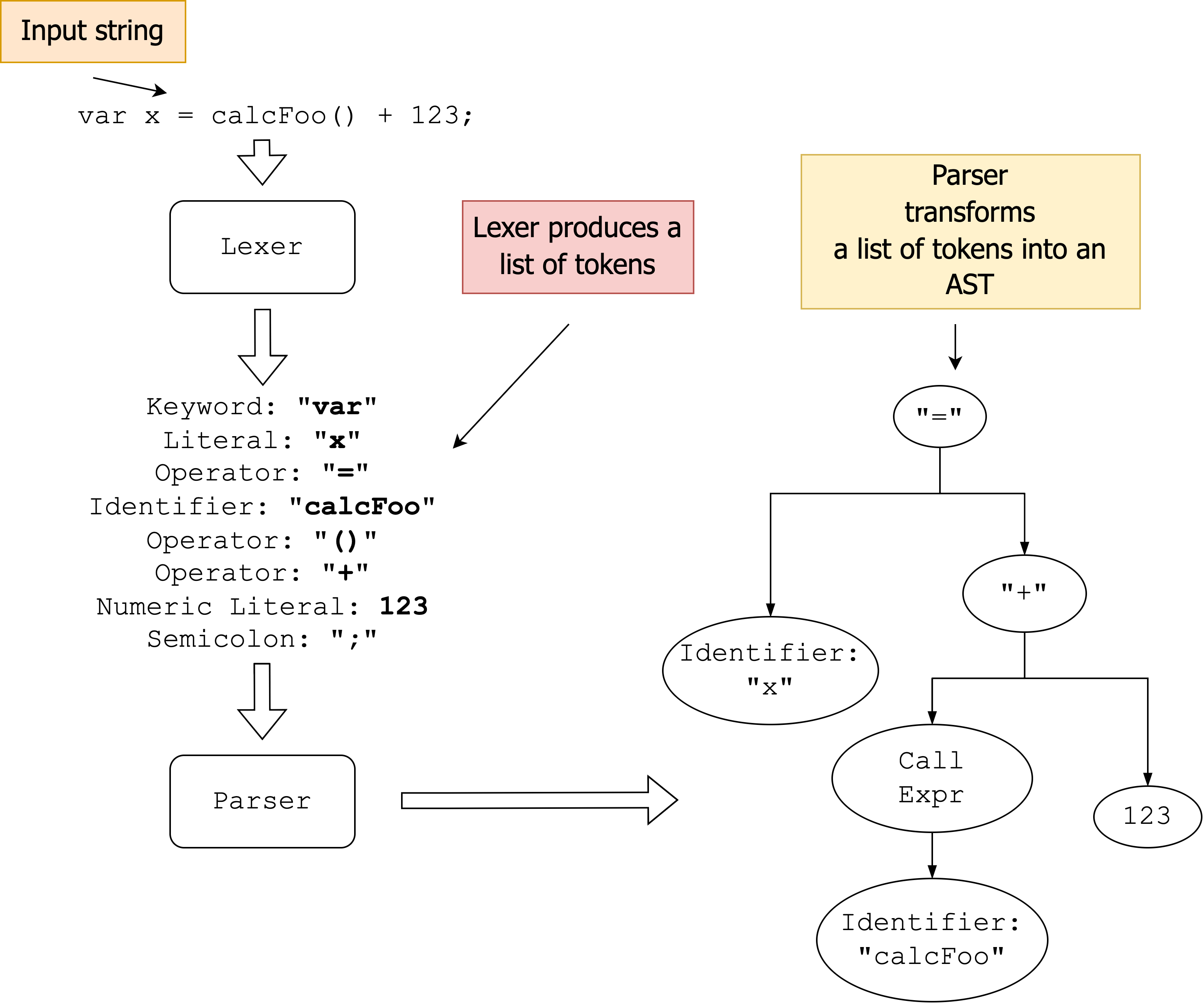

In short, parser combinators is a functional approach to parsing. As vague as it sounds, that sentence best describes the idea. Traditionally, parsing is performed in a series of steps and the output is either translated into an abstract syntax tree that can then be interpreted (in case of interpreters) or transformed into an intermediate representation that can be translated to a bytecode or a machine language (I’m completely ignoring other necessary steps like type checking, optimisation etc). This process requires a lexer - converting the input string into a stream of tokens comprising a language and the parser itself, operating on that tokens - checking if the sequence they form is compliant with the grammar. In simplest fashion this can be depicted as:

Right, still though, what are parser combinators and how does the whole process differs?

Parser combinators is an idea where set of bare minimum, fundamental parsers is created, specialising in parsing a specific construct. These parsers are then combined to construct parsers capable of processing a more complex structures.

This means that instead of defining a lexer and a parser operating on tokens it produces, you build a set of small parser operating on input string directly. You’ll have parsers recognising trivial constructs like:

- words,

- single characters,

- keywords,

- whitespaces,

- …

and you’ll combine these together to create a parser for a more complex constructs.

What I’ll build?

It’s really difficult to explain that without actually implementing them. So, please bear with me as I’ll try to implement a small library of fundamental parsers in both Python and C++, trying to explain the concept of parser combinators as I go. My goal is to be able to parse something non-trivial and my arbitrary choice is the INI file format. Let’s dive in!

Monadic parser combinators

I’ll start with walking through the aforementioned document describing the notion of parser combinators trying to recreate the ideas described there in an imperative language. I’m gonna start with Python as it’s a perfect language for prototyping.

The paper starts with a definition of a parser type:

|

|

This can be translated to C++ as:

|

|

This is quite verbose! The semantics is that Parser is a function template. Function, that consumes input string and returns a list of parsing results and the remaining, unparsed input string. For example:

Given an input string:

|

|

…and a parser parsing words, the expected result would be:

|

|

Any given parser can either succeed or fail. The parser indicates failure by returning an empty list. For example:

|

|

Next, the paper defines two most fundamental (and most important as well, as

we’ll later find out) parsers. These are result and zero parsers.

The first one, always succeeding and the second one, always failing. Let’s

have a look on the Haskell implementation first:

|

|

This looks a bit magical at first glance to people not acquainted with Haskell,

also the names are a bit unfortunate as well. As the paper clarifies the \inp -> ... syntax defines a lambda function taking input inp and returning an

array containing one element, a tuple of (v, inp). I must admit I was

initially confused because I assumed that result v is, similarly to Parser a a template but it’s not, these functions translate to Python as:

|

|

Next parser that the paper describes is the item parser - a parser that

parses a single character from the given input string or fails if the string is

empty. In python, this will look the following way:

|

|

Combining parsers together

The most important concept behind parser combinators is the ability to “glue”

them together to construct a parser for more complex constructs. This is where

the bind operation comes into play. It’s essential to understand how it

works and what it does. Quoting the paper, here’s the Haskell definition:

|

|

bind is a function that takes two arguments: first Parser a and second, a

a function that takes a and returns Parser b. bind itself returns Parser b.

This is a bit tricky to understand initially but it’s quite easy after a

moment. Simply speaking, bind takes a Parser and a “converter” function

that is able to convert a (the parsing result from Parser a) into Parser b. Again, I must admit, it took me a while to understand what bind really

does. Most of the difficulty lies really in deciphering Haskell’s syntax. Python

equivalent is, in my opinion, a lot easier to understand:

|

|

I’ve called the a -> Parser b function a binder. This function takes the

parsing results from the first parser and returns a parser of Parser b type.

This latter parser is then invoked on the remaining input string (as returned

by first parser). The loop is only to comply with the established semantics

which suggests that there can be more than one valid parsing result.

That’s all the building blocks required. With that in hands, it’s possible to

construct more interesting parsers. Let’s focus on how bind can be used

because understanding what it does is absolutely essential. The first

parser that the paper mentions is the sat parser. It’s a Parser Char

parser, meaning the parsed result is a character. It takes a predicate and

parses a single character from the input string if the predicate is true.

Here’s the Haskell code for reference:

|

|

… and equivalent Python implementation:

|

|

The “binder” function in this case is a lambda taking a single character (the

result of item parser) and returning either a result or zero

parser if the predicate stands or not. The argument to the binder function

is always a result from the first parser - it’s important to bear that in mind.

In other words what “binder” function does, is it takes first parser’s result

and returns a parser of type Parser b. Hopefully, that makes sense?

One “combinator” is needed before I’ll proceed with implementation of the remaining fundamental parsers. In the paper, it’s named plus - it executes two given parsers on the same input and combines their results. Here’s the Haskell code:

|

|

Personally, I don’t like that name and I prefer to call this function “anyof”. Here’s the implementation in Python:

|

|

With that in mind, I’ll implement the rest of the parsers mentioned in the paper. Here they are:

|

|

Some names may be slightly different than in the original paper, but majority is “pythonized” quite verbose.

That’s all cool, I’m now able to parse characters of all sorts but still I can’t parse any strings. This can be sorted out by combining the character parsers recursively. The Haskell definition in the paper for parsing words is quite enigmatic (at least to me):

|

|

Without knowing Haskell syntax it’s difficult to grasp it. To understand this syntax fully, here’s a couple of hints:

Sequence packing/unpacking

(x:xs) construct is used to express sequence packing/unpacking. When used on the left hand side:

|

|

It can be used to concatenate sequences as well (and this is exactly how word parser uses it):

let x = 'H'

let xs = "ello"

let s = (x:xs)

-- s is now: "Hello"

where expression

This is used to conveniently defer the declaration of an identifier. In case

of word parser, it would be difficult to employ tail recursion without

resorting to where. So, what it does in this context is that it gets

evaluated first before the neWord 'plus' result "" expression.

What happens in the recursive call? First, let’s analyse the base case. Both

neWord and result "" are of type Parser String. If the input string is

empty, the letter parser will fail and thus terminate the recursive descend

so, only the result "" will matter.

Now, the recursion itself. The first “binder” lambda collects a character

parsed by a letter parser. The “binder” now has a character but it has to return a

Parser String type so, it performs another bind inside where a recursive

call happens in which a single character stored in x is combined with the

string parsed as result of the recursion (and stored in y). The results are

combined to a string and returned via a result parser to form the effective

type of Parser String. This can be expressed in Python the following way:

|

|

This is pretty cool - we can now parse words! This abstraction can be (and

should be) lifted though. In general, the word_parser is applying

the same parser over and over again until parsing fails. Having a parser like

that, that can apply any other parser until the latter fails is very handy.

You can imagine it may be useful to construct a parser parsing not only words

(which are sequences of characters) but i.e. sentences (which are sequences of

words) or any other repeating constructs. Let’s start abstracting this

implementation. First thing, is to extract the embedded parser into an argument:

|

|

Now, there’s a bit of a problem with that since the result "" only applies as

the initial value (returned in recursive base case) for strings. I’ll extract

that as well:

|

|

There’s one last change required. The act of combining partial results is currently hard-coded and specific to strings only. I’ll extract that into an argument as well and call that function a “reducer”:

|

|

Now, the implementation of make_word_parser can be expressed the following

way:

|

|

This is great but it’s not perfect yet, in case of strings, I currently parse words comprised of alphabetic characters but what if I’d want to parse all alphanumeric characters as words or include other character classes? It seems like it does make sense to inject the parser as well:

|

|

I’m almost ready to parse the Ini files. There’s one more thing very common

when lexing that needs to be sorted out - white characters. Most of the time

these needs to be ignored. They can be of arbitrary amount as well. For that

purpose, I’ll introduce:

|

|

This parser wraps a given parser and always succeeds. If the wrapped parser

fails it’ll return [(None, input)], if it succeeds it will just forward the

results - this is crucial since bind_parser parsing chain is broken on first

encountered parse failure and I want to have a way to avoid that.

Two more parsers which happen to be very handy are

|

|

Both take two parsers pa, pb. take_right runs the input through first

parser and then runs the remaining input through second parser, returning only

the second parser results. take_left does the opposite. Runs the input

through first parser, then through the second parser and returns first parser

results. These two parser wrappers are very handy when dealing with white

characters, similarly as optional parser.

Towards parsing INI files

I’ve got all the necessary building blocks now. I’ll now combine them to parse the Ini file syntax. Ini file is comprised of sections. Each section has a name and a set of key value fields. The syntax can be described the following way:

|

|

I’m gonna start by parsing <KEY_VALUE> first and build the complete parser

bottom-up.

|

|

There it is. The code is literally self documenting so there’s no point in discussing the parser whatsoever. Suffice to say that the parser returns key-value pairs as list of tuples. This is one building block, now a way to parse a list of key values is needed. It happens to be even easier than parsing individual key-value pairs:

|

|

Here, I’ve introduced one small wrapper which reduces any parser’s output to a single result. Most of my parsers return only one results apart from the recursive ones which return a concatenated list of partial results which I don’t really care about.

Believe it or not but the Ini parser is almost complete. Now, the section

header parser:

|

|

Pretty simple, just as the previous ones. Now, before I continue, I’m gonna introduce some types representing a parsed Ini file:

|

|

These are pretty simple. An Ini file is a collection of sections and a

section is a collection of key-value pairs with a name. I need two more

parsers for a section and a collection of sections. Here they are:

|

|

The parser is now complete! After feeding it some example Ini file:

|

|

…the output looks the following way:

|

|

Nice! I’ve got a working prototype and a parser combinators library which I can use to parse ANY format I want. But my overall goal is to solve this problem in C++. How difficult will it be now to translate a Python code, which I fully understand, into C++?

Towards C++ Ini file parser

I’m tempted to implement a parser as a virtual interface template, something like this:

|

|

This is a bad idea though, the amount of work required will be truly disheartening for no good reason. Since parser combinators are an idea from a functional programming world, it’s best to stick with a function-based interface. I’m gonna define the parser as:

|

|

There’s this mysterious Result<A> type. What’s that all about? Parser can

either succeed or fail and this is indicated via a return type. Originally, in

Haskell, the semantics of a Parser was that an empty result list indicates a

parsing failure. I’m gonna change the approach slightly in C++. I’ve very

rarely had a need for multiple than one result returned from any given parser.

To simplify the Result type in C++, I’m gonna drop the results list and

always return a single result. How will I indicate a failure? Exceptions!

Have a look:

|

|

Simple stuff. In case of parser error, retrieval of the parsed value will throw an exception. Let’s have a look on the first basic parsers in C++:

|

|

I’m not gonna show all the code here. I’m gonna focus only on the remaining

most important part of any parser combinators library which is of course the

bind operations:

|

|

Of course, I’ve implemented the same INI-file format parser in the examples

directory of the repo as well - I encourage you to have a look.

Conclusion

That’s it! Parser combinators is a very neat concept. There’s a bit of a learning curve if you don’t have a background in functional programming - but nothing too drastic. Additionally, as even the paper title implies, the implemented parser combinators library allows for monadic composition - but that’s a topic for a completely different post.

All the code is available on my gitlab in the following repositories: