Is 'rope' data structure still any relevant in modern applications?

Ropes are an alternative approach to representing strings within memory but do they still hold any strong hand nowadays and if so, why aren’t they as widely adopted as classical character strings? This is something I’m gonna try to explore using a simple C++ implementation and a couple of benchmarks.

What are ropes?

Rope represents a string in a tree-like fashion. Leaf nodes store the actual characters, while internal tree nodes store weights (mostly). Wikipedia has a pretty nice article, which I encourage you to familiarise with.

Additionally, this classic white paper details ropes as well.

What are the advantages over character strings?

The main selling point is concatenation which is O(1). Additionally insertion/deletion happens in O(log n). This would make it a perfect candidate as an underlying data structure to implement text editor buffer but aside of that, what else can it be used for?

Applications

One thing that comes to mind are string builders. Golang has

strings.Builder, C++ has the std::stringstream. Both of these provide much

more functionality than just plain boring string concatenation (like arbitrary type to

string conversion etc) but I’m gonna focus on most simplest use-case - let’s

explore if ropes perform any better when used just for string concatenation

than plain old std::stringstream.

What am I gonna test?

Custom implementation

I always had a soft spot for rope data structure so, I can’t resist but to implement one myself - of course this will be a very limited implementation and will have many caveats but should be good enough as a proof of concept.

You can find my repo, with all the details here.

Boost.Text

Boost.Text is an implementation by Zachary Laine which aims to bring in more robust support for UTF-8 to C++ but aside of that, it brings in an implementation of a set of interesting data structures, one of which, amongst many, are ropes. Boost.Text is meant to undergo proposal as an addition to boost libraries. This is very exciting! There’s a good chance C++ will have a production ready implementation of ropes available to everyone (which would make C++ quite unique in that regard amongst other languages).

Boost.Text deserves a separate post of its own, which I may write, since it packs a lot of interesting features but at the moment, this is outside of this post’s scope. I recommend going through the official documentation available on project’s github pages.

Advantages

The rope implementation within Boost.Text offers a lot more features compared to my simple implementation. To name a few:

- Copy on write

- Proper Unicode support

std::stringreferencing- thread safety

In fact uencoded_rope implementation is a lot more complex and resembles

more of a BTree. More can be found in the official

documentation.

Custom implementation

For the sake of this comparison, I’ve implemented a rope, following the documentation on Wikipedia. I didn’t try to focus on any particular production application, nor did I try to optimise the code and make it production proof in whatever shape or form. My implementation is rather straight forward without too much thought to it.

I’ve got a common interface for nodes:

|

|

There are two types of nodes:

leaves- these actually contain the string charactersinternals- these glue the nodes together in the tree

I won’t go into much details as the code is quite short and easy to comprehend so, if you’re interested, I encourage you to have a look here.

Benchmarking

This is just a very rough, back of the envelope comparison to see if it makes sense to resort to ropes at all. Therefore, the presented implementation and the comparison itself should be treated with a pinch of salt and by no means whatsoever, is meant to resemble any solid scientific analysis.

Right, that being said, here’s my benchmarks:

|

|

Since the benchmarks rely on Boost.Text, I’ve kept them on a separate branch within the repo.

Quick word about what’s going on here. The first benchmark parameter:

const std::size_t cnt = state.range(0);

… defines the length of the string that will be appended. Additionally, the

string s definition is wrapped with pause/resume timing statements. I want

to measure the concatenation time purely and the string allocation is

irrelevant, hence I decided to exclude it from the measurements. Here’s the

results:

|

|

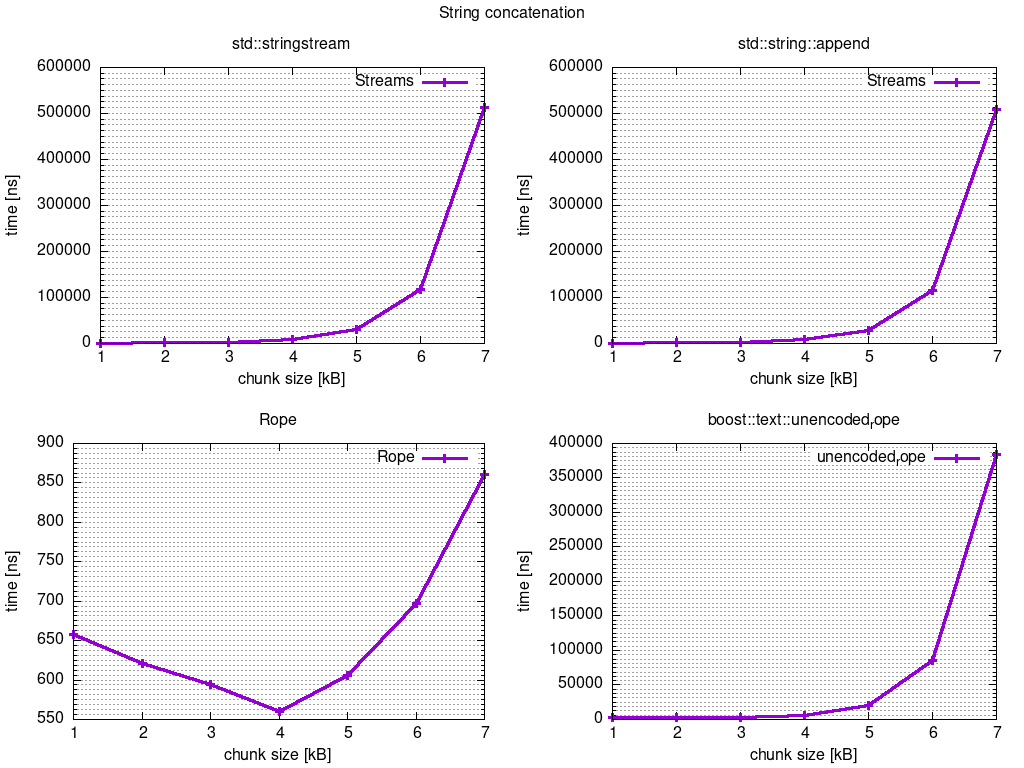

It’s visible from the above, that std::stringstream has just a small overhead

over bare std::string::append. The append time grows proportionally with the

chunk size both in case of using std::string::append and std::stringstream.

This is expected, as copying data can’t be avoided.

It’s a completely different story for my simple rope implementation. The measured time remains relatively constant, independent of the chunk size.

I can’t really comment regarding boost::text::unencoded_rope - the underlying

data structure is a lot more complex. Potentially, there’s tree rebalancing

happening at some point, which causes the time complexity to be dependant on

the chunk size. Maybe the nodes are restricted to a certain number of

characters and that inflicts additional performance penalty. Still though,

almost twice as fast as conventional std::stringstream or std::string.

Here’s how it looks plotted on a graph:

Conclusion

Standard streams and std::string::append on their own, are perfectly

good solution as a string builders when dealing with small strings.

However, it’s perfectly visible from the above comparison, that using

ropes, even in their simplest form may yield significant advantages

when the chunk size grows. The advantages are quite obvious for chunks

bigger than 1K when, in case of a simple rope implementation like the

one discussed in this post, the only cost when appending strings is

related to memory allocation of a constant size internal rope node. To

conclude, use cases for ropes are therefore quite limited, still

though ropes are a relevant data structure that could be a very

attractive alternative for a specialised set of applications.