Using SSL for services running in your home network

In this post I’m gonna discuss how to generate SSL certificates for services hosted within your home network. This can be anything, your plex or jellyfin instance or any development infrastructure you might be hosting locally. Keep on reading if you’re tired of HTTP and “untrusted website” warnings from your browser.

The plan

I’m gonna use navidrome as an example for the purpose of this post. It’s a music player typically running on port 4533.

Having to rely on an IP address and a port number is annoying, so first let’s come up with a name for the machine hosting the service - huginn.home.arpa.

huginn.home.arpa is a raspberry pi running in my network that hosts the service.

I’m using home.arpa as this is recommended by RFC 8375.

I recommend setting up a local DNS server to make things easier. This is out of scope of this post, so I’m gonna add huginn.home.arpa to my machine’s /etc/hosts to point to the mentioned raspberry pi (192.168.0.201).
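The hosts entry described above can be sketched like so. This is a minimal sketch, not a definitive recipe: `HOSTS_FILE` is a variable I made up for illustration and it defaults to a scratch file, so nothing on your system changes unless you point it at /etc/hosts yourself.

```shell
# Assumption: HOSTS_FILE is a hypothetical variable that defaults to a scratch file,
# so the snippet is safe to try. Set HOSTS_FILE=/etc/hosts and run as root to apply it.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"
ENTRY="192.168.0.201 huginn.home.arpa"

# Append the entry only if that exact line is not in the file yet.
grep -qxF "$ENTRY" "$HOSTS_FILE" || printf '%s\n' "$ENTRY" >> "$HOSTS_FILE"

# Show the line that now maps the name to the raspberry pi.
grep -F "huginn.home.arpa" "$HOSTS_FILE"
```

Once the entry is in the real /etc/hosts, something like `getent hosts huginn.home.arpa` (on Linux) should print the address.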


Good. Now huginn.home.arpa resolves to 192.168.0.201. This is still less than perfect as I need to use the port number explicitly: huginn.home.arpa:4533. I’ll need a reverse proxy to remedy that and forward requests directly to port 4533. You can run that reverse proxy wherever you want. To make things simpler, let’s assume it runs on the same machine as navidrome itself. I’m gonna use nginx, with its configuration file at /etc/nginx/sites-enabled/huginn.home.arpa.conf.
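A plain http reverse proxy for this setup might look roughly like the sketch below. The exact directives are my assumption, not necessarily what the original file contained, and `CONF_DIR` is a hypothetical variable that defaults to a scratch directory so the snippet can be tried without touching nginx.

```shell
# Assumption: CONF_DIR is a hypothetical variable defaulting to a scratch directory.
# Set CONF_DIR=/etc/nginx/sites-enabled and run as root to apply it for real.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

# The quoted 'EOF' stops the shell from expanding nginx variables such as $host.
cat > "$CONF_DIR/huginn.home.arpa.conf" <<'EOF'
server {
    listen 80;
    server_name huginn.home.arpa;

    location / {
        # navidrome listens on port 4533 on the same machine
        proxy_pass http://127.0.0.1:4533;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}
EOF

cat "$CONF_DIR/huginn.home.arpa.conf"
```

After placing the file in the real nginx directory, `sudo nginx -t` checks the syntax before you reload nginx.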


With that in place, it’s possible to just use huginn.home.arpa to access navidrome.

That works for plain old http. What about https?

The domain

During the TLS handshake, asymmetric cryptography based on the server’s public and private keys is used to establish a shared secret, which is then used for symmetric encryption for the rest of the TLS connection. The client also verifies the server’s identity using its public key certificate. This is done by following a certificate chain. Usually, a bundle of globally trusted certificate authorities is distributed with your operating system. When inspecting the server’s certificate, the client tries to determine the certificate authority (CA) that signed it. If the chain leads to a trusted CA (and the certificate matches the requested hostname and hasn’t expired), the server’s certificate is trustworthy and the connection is considered secure.
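To get a feel for the chain check described above, here is a small local experiment with openssl. It’s only a sketch: the CA and the hostname (“Demo Root CA”, service.example.test) are made-up names, everything lives in a scratch directory, and a real client does more than this (hostname matching, for example).

```shell
# Everything happens in a scratch directory; all names are hypothetical demo values.
work="$(mktemp -d)"

# 1. A throwaway CA: private key plus a self-signed certificate.
openssl req -x509 -newkey rsa:2048 -nodes -days 1 \
  -subj "/CN=Demo Root CA" \
  -keyout "$work/ca.key" -out "$work/ca.crt" 2>/dev/null

# 2. A server key and a certificate signing request for it.
openssl req -newkey rsa:2048 -nodes \
  -subj "/CN=service.example.test" \
  -keyout "$work/server.key" -out "$work/server.csr" 2>/dev/null

# 3. The CA signs the request, producing the server certificate.
openssl x509 -req -days 1 -in "$work/server.csr" \
  -CA "$work/ca.crt" -CAkey "$work/ca.key" -CAcreateserial \
  -out "$work/server.crt" 2>/dev/null

# 4. The "client" check: does the server certificate chain up to a CA we trust?
openssl verify -CAfile "$work/ca.crt" "$work/server.crt"
```

The last command only succeeds because the demo CA is passed in explicitly as trusted. Your browser does not know that CA, which is exactly why self-signed setups produce warnings and why a publicly trusted CA is used in the rest of this post.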

With that in mind, a publicly trusted CA will only issue a certificate for a public domain that you can prove you control, so a name like huginn.home.arpa won’t do. How you obtain the domain is out of scope here. You can buy a cheap domain on offer (usually with the first 12 months free) or you can just use something like duckdns. The bottom line is that you have to be in control of the domain and be able to point its A (and optionally AAAA) records to your router’s IP.

For the purpose of this post, I’ve created twdevlab.duckdns.org on duckdns and pointed it to my router’s IP. This is only temporary and the domain doesn’t really have to point to any valid address once you’re done with certificate generation.

Port forwarding

I’ll be using certbot to generate my free letsencrypt keys and certificate. To be able to do that, I’ve forwarded ports 80 and 443 on my router to 192.168.0.201 (the raspberry pi running both the nginx reverse proxy and navidrome). Again, this is only temporary. In case you’re wondering, by the time you read this, the domain should already be parked at 1.2.3.4 and port forwarding on my router is turned off so… don’t bother :).

Let’s encrypt

SSL keys and certificates can be obtained automatically using certbot. First, install it.


Run certbot.
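The install and the first certbot run might look like the sketch below. I’m assuming a Debian-based system (such as Raspberry Pi OS) with the certbot nginx plugin; package names differ on other distributions. The `run` helper is my own addition: it only prints the commands unless you set `APPLY=1`, so you can review them first.

```shell
# Hypothetical dry-run helper: prints the command unless APPLY=1 is set.
run() {
  if [ "${APPLY:-0}" = 1 ]; then "$@"; else printf '%s\n' "$*"; fi
}

# Assumption: Debian-based OS; installs certbot and its nginx plugin.
run sudo apt install -y certbot python3-certbot-nginx

# Obtain a certificate for the domain and let certbot edit the nginx configuration.
run sudo certbot --nginx -d twdevlab.duckdns.org
```

Run it with `APPLY=1` in the environment to actually execute the commands.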


We’ve got the certificate and the keys! Additionally, the default nginx server configuration has been updated to use it - this is what I wanted as I don’t like to add these entries manually.

Now, run certbot again, this time for a wildcard domain.
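A wildcard request needs the DNS challenge, so the second run could look something like this. Again a sketch under assumptions: I’m using certbot’s manual mode, and the `run` helper is a made-up dry-run wrapper that only prints the command unless `APPLY=1` is set.

```shell
# Hypothetical dry-run helper: prints the command unless APPLY=1 is set.
run() {
  if [ "${APPLY:-0}" = 1 ]; then "$@"; else printf '%s\n' "$*"; fi
}

# certonly: just obtain the certificate, don't touch the nginx configuration.
# --manual with the dns challenge makes certbot print a TXT value and wait for you.
# When typing this by hand, keep the quotes so the shell doesn't expand the '*'.
run sudo certbot certonly --manual --preferred-challenges dns \
  -d '*.twdevlab.duckdns.org'
```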


I’m requesting a wildcard domain certificate here so, effectively, all subdomains of twdevlab.duckdns.org will be able to use https connections. This is great since I only need one certificate for the reverse proxy that I can reuse locally for as many services as I require.

But to make that work, certbot asks me to set a TXT record for my domain to prove ownership. How this is done varies wildly depending on the DNS provider you’re using for your domain. In the case of duckdns, you have to visit a specific URL, as described in its TXT Record API documentation, to populate the TXT record.
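For duckdns, that URL can be assembled like so. This is a sketch based on my reading of the duckdns API spec; `duckdns_txt_url` is a helper name I made up, and YOUR_TOKEN and VALUE_FROM_CERTBOT are placeholders for your account token and the string certbot prints. The network calls are commented out so the snippet only prints the URL.

```shell
# Hypothetical helper: builds the duckdns update URL that sets a TXT record.
#   $1 = subdomain (without .duckdns.org)
#   $2 = duckdns account token
#   $3 = TXT value printed by certbot
duckdns_txt_url() {
  printf 'https://www.duckdns.org/update?domains=%s&token=%s&txt=%s&verbose=true\n' \
    "$1" "$2" "$3"
}

url="$(duckdns_txt_url twdevlab "YOUR_TOKEN" "VALUE_FROM_CERTBOT")"
echo "$url"

# Uncomment to actually set the record, then check it before continuing in certbot:
# curl -fsS "$url"
# dig +short TXT _acme-challenge.twdevlab.duckdns.org
```

It’s worth waiting until the TXT value is visible in DNS before letting certbot continue, otherwise the challenge fails.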

Once that’s done, the only thing remaining is to update the reverse proxy configuration.

Reverse proxy configuration

certbot edits nginx’s default.conf. Let’s move huginn.home.arpa.conf to twdevlab.duckdns.org.conf and add the SSL entries that certbot wrote to default.conf (the certificate and key paths) to it.
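The resulting file might look roughly like the sketch below. Treat the details as assumptions: `CONF_DIR` is a hypothetical variable that defaults to a scratch directory, and the certificate directory name (twdevlab.duckdns.org-0001 here) depends on how certbot named the wildcard certificate on your machine, so check it with `sudo certbot certificates` before copying anything.

```shell
# Assumption: CONF_DIR is a hypothetical variable defaulting to a scratch directory.
# Set CONF_DIR=/etc/nginx/sites-enabled and run as root to apply it for real.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

# The quoted 'EOF' stops the shell from expanding nginx variables such as $host.
cat > "$CONF_DIR/twdevlab.duckdns.org.conf" <<'EOF'
server {
    listen 443 ssl;
    server_name navidrome.twdevlab.duckdns.org;

    # Assumption: adjust the directory to whatever "certbot certificates" reports.
    ssl_certificate     /etc/letsencrypt/live/twdevlab.duckdns.org-0001/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/twdevlab.duckdns.org-0001/privkey.pem;
    # TLS defaults that certbot's nginx plugin installs
    include     /etc/letsencrypt/options-ssl-nginx.conf;
    ssl_dhparam /etc/letsencrypt/ssl-dhparams.pem;

    location / {
        # TLS ends here; navidrome itself still speaks plain http on port 4533
        proxy_pass http://127.0.0.1:4533;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}

server {
    # Send plain http requests over to https
    listen 80;
    server_name navidrome.twdevlab.duckdns.org;
    return 301 https://$host$request_uri;
}
EOF

cat "$CONF_DIR/twdevlab.duckdns.org.conf"
```

Every additional service gets its own `server` block with a different `server_name` and `proxy_pass` port, all reusing the same two certificate lines.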


Additionally, since there’s no local DNS in place, I’m gonna add navidrome.twdevlab.duckdns.org to my /etc/hosts, pointing to the same raspberry pi (192.168.0.201).


It’s now possible to test this configuration, like so.
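One way to test it from the command line is sketched below. The exact commands are my assumption (nginx managed by systemd, curl installed), and the `run` helper is a made-up dry-run wrapper that only prints the commands unless `APPLY=1` is set.

```shell
# Hypothetical dry-run helper: prints the command unless APPLY=1 is set.
run() {
  if [ "${APPLY:-0}" = 1 ]; then "$@"; else printf '%s\n' "$*"; fi
}

# Check the nginx configuration, then reload it (assumes systemd).
run sudo nginx -t
run sudo systemctl reload nginx

# Request the headers over https. --resolve pins the name to the raspberry pi,
# so this works even on a machine without the /etc/hosts entry.
run curl -sSI --resolve navidrome.twdevlab.duckdns.org:443:192.168.0.201 \
  https://navidrome.twdevlab.duckdns.org/
```

If curl prints response headers without any certificate error, the chain and the hostname check out.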


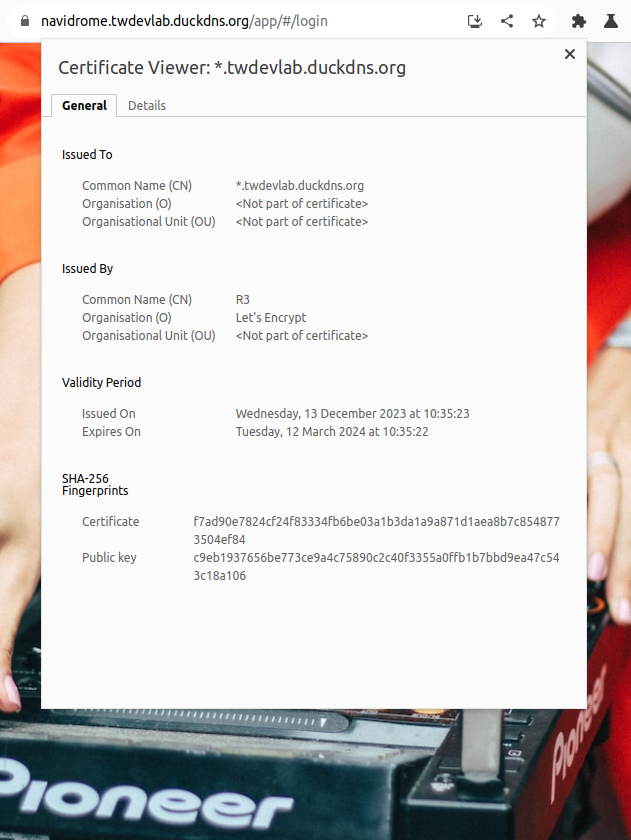

Visiting navidrome.twdevlab.duckdns.org will yield a working https connection with a valid public key certificate.

Next steps

I can now have subdomains for all my LAN services like proxmox.twdevlab.duckdns.org, portainer.twdevlab.duckdns.org and so on. With the generated wildcard certificate all of that will work with TLS, which is terminated on the reverse proxy.

Use local DNS

For the purpose of this post I’ve used the /etc/hosts file to resolve the subdomains locally but, in the long run, this is simply not gonna work, as such configuration would have to be duplicated on all hosts.

In my network, I’m using pihole as my local DNS, and my DHCP server advertises it to all my clients. A local DNS server becomes a necessity once the number of devices in the network grows. It’s something everyone should consider, especially when managing subdomains for wildcard SSL certificates.
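Since pihole is built on dnsmasq, one way to cover all the subdomains at once is a single dnsmasq `address` rule. This is a sketch, not my exact setup: the file name 99-twdevlab.conf and the `DNSMASQ_DIR` variable are made up, the variable defaults to a scratch directory, and depending on your pihole version the /etc/dnsmasq.d directory may have to be enabled in its settings first.

```shell
# Assumption: DNSMASQ_DIR is a hypothetical variable defaulting to a scratch directory.
# Set DNSMASQ_DIR=/etc/dnsmasq.d and run as root on the pihole host to apply it.
DNSMASQ_DIR="${DNSMASQ_DIR:-$(mktemp -d)}"

cat > "$DNSMASQ_DIR/99-twdevlab.conf" <<'EOF'
# Resolve twdevlab.duckdns.org and every subdomain of it to the reverse proxy.
address=/twdevlab.duckdns.org/192.168.0.201
EOF

cat "$DNSMASQ_DIR/99-twdevlab.conf"
```

After restarting pihole’s DNS resolver, every client that uses it resolves navidrome.twdevlab.duckdns.org, proxmox.twdevlab.duckdns.org and so on to the reverse proxy, with no /etc/hosts edits needed.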